Published: November 20, 2024 at 8:22 am

Updated on June 09, 2025 at 7:03 pm

Knowing how to read bot signals is essential for protecting your website’s SEO and avoiding unnecessary trouble. This piece explains how to tell helpful bots from harmful ones, how to spot the signals each leaves behind, and how to manage bot traffic so that your site stays visible and trustworthy in search engine results.

At its core, bot traffic consists of automated software programs designed to perform specific tasks online. Some bots are heroes—like those that help search engines index your content—while others are villains, wreaking havoc on your site’s integrity.

Search engine crawlers such as Googlebot and Bingbot work tirelessly to ensure your content is discoverable. Then there are crawlers run by SEO tools, such as AhrefsBot, that analyze your site for optimization opportunities. Even bots monitoring site performance can be considered beneficial.

On the flip side, we have spam bots flooding comment sections with nonsense, scraper bots stealing your hard-earned content, DDoS bots attempting to crash your server, and malware distribution bots looking to exploit vulnerabilities.

Recognizing bot signals is crucial for differentiating between helpful and harmful traffic. Here’s what to look out for:

If you notice sudden spikes in traffic without any viral posts or influencer mentions backing it up, you might be dealing with some sketchy bots. These anomalies can skew your analytics data.
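One way to make "sudden spike" concrete is to compare each day against the days just before it. The sketch below is a minimal illustration, not a production detector: the function name, the 7-day window, and the threshold of 3 standard deviations are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def find_traffic_spikes(daily_visits, window=7, threshold=3.0):
    """Return indexes of days whose visits exceed the trailing average
    by more than `threshold` standard deviations.

    `window` and `threshold` are illustrative defaults, not recommendations.
    """
    spikes = []
    for i in range(window, len(daily_visits)):
        history = daily_visits[i - window:i]
        baseline = mean(history)
        # Use at least 1.0 so a perfectly flat history does not flag
        # every tiny increase as a spike.
        spread = max(stdev(history), 1.0)
        if daily_visits[i] > baseline + threshold * spread:
            spikes.append(i)
    return spikes


if __name__ == "__main__":
    visits = [100, 110, 95, 105, 98, 102, 107, 900, 104]
    print(find_traffic_spikes(visits))
```

A flagged day is only a prompt to investigate. A campaign launch or a mention elsewhere can produce the same pattern, so check referrers and user agents for that day before concluding bots are responsible.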

High bounce rates coupled with very short session durations often point to non-human visitors. Traffic that never engages with your content deserves a closer look.
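As a rough illustration of this check, the sketch below flags traffic sources whose sessions almost always bounce and last only a few seconds. The `SegmentStats` type, the field names, and every threshold are hypothetical; real numbers would come from your own analytics export.

```python
from dataclasses import dataclass


@dataclass
class SegmentStats:
    """Aggregated numbers for one traffic source (hypothetical shape)."""
    source: str
    sessions: int
    bounces: int
    total_duration_seconds: float


def low_engagement_segments(segments, min_sessions=50,
                            bounce_limit=0.9, duration_limit=5.0):
    """Return sources with a very high bounce rate and very short sessions.

    All thresholds are illustrative assumptions and should be tuned
    against what normal traffic looks like on your site.
    """
    flagged = []
    for seg in segments:
        # Skip tiny samples, where rates are too noisy to mean anything.
        if seg.sessions < min_sessions:
            continue
        bounce_rate = seg.bounces / seg.sessions
        avg_duration = seg.total_duration_seconds / seg.sessions
        if bounce_rate >= bounce_limit and avg_duration <= duration_limit:
            flagged.append(seg.source)
    return flagged
```

Requiring both conditions together keeps the check conservative: a landing page that answers a question quickly can have a high bounce rate with perfectly human visitors.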

Look out for spammy comments or leads from bizarre email addresses—these are classic signs of malicious bot activity.

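Legitimate bots identify themselves clearly in the User-Agent string; suspicious ones often use misleading identifiers or impersonate a well-known crawler. Because the User-Agent header is easy to fake, treat it as a claim to be verified rather than as proof.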
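Since a User-Agent string can be forged, a claimed crawler identity can be cross-checked with a reverse DNS lookup followed by a forward lookup, which is the approach the major search engines describe for verifying their crawlers. The sketch below is an illustration under assumptions: the function names are made up for this example, and the hostname suffixes in `CRAWLER_DOMAINS` should be confirmed against each operator's current documentation before use.

```python
import socket

# Hostname suffixes assumed from the crawler operators' public guidance.
# Verify them against current documentation before relying on this list.
CRAWLER_DOMAINS = {
    "googlebot": (".googlebot.com", ".google.com"),
    "bingbot": (".search.msn.com",),
}


def claimed_crawler(user_agent):
    """Return the crawler name a User-Agent claims to be, or None."""
    ua = user_agent.lower()
    for name in CRAWLER_DOMAINS:
        if name in ua:
            return name
    return None


def is_verified_crawler(ip, user_agent, reverse_lookup=None, forward_lookup=None):
    """Check a claimed crawler with reverse DNS, then forward DNS.

    The lookup functions are injectable so the logic can be tested
    without touching the network.
    """
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])

    name = claimed_crawler(user_agent)
    if name is None:
        return False
    try:
        host = reverse_lookup(ip)
        # The reverse record must sit under one of the expected domains.
        if not host.lower().endswith(CRAWLER_DOMAINS[name]):
            return False
        # The forward lookup must resolve back to the original IP address.
        return ip in forward_lookup(host)
    except OSError:
        # Covers failed DNS lookups (socket.herror, socket.gaierror).
        return False
```

A request that claims to be Googlebot but fails this check is a strong candidate for blocking, while one that makes no crawler claim at all simply returns `False` and needs other signals to classify.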

Keep an eye on requests from IP ranges that appear on published blocklists, and on unusually frequent requests from a single IP address. Both patterns are often signs of bad bots.
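Both IP signals can be pulled from a server access log. The sketch below assumes a log format in which the client IP is the first whitespace-separated field on each line (as in the common and combined log formats); the function name, the blocklist input, and the request limit are illustrative assumptions.

```python
from collections import Counter
from ipaddress import ip_address, ip_network


def suspicious_ips(log_lines, blocked_ranges, max_requests=100):
    """Map each suspicious client IP to the reason it was flagged.

    Assumes the client IP is the first field of every log line.
    `blocked_ranges` is a list of CIDR strings from a blocklist you trust.
    """
    networks = [ip_network(cidr) for cidr in blocked_ranges]
    counts = Counter(
        line.split(None, 1)[0] for line in log_lines if line.strip()
    )

    flagged = {}
    for ip, hits in counts.items():
        try:
            addr = ip_address(ip)
        except ValueError:
            continue  # First field was not an IP address; skip the line.
        if any(addr in net for net in networks):
            flagged[ip] = "listed range"
        elif hits > max_requests:
            flagged[ip] = "high request count"
    return flagged
```

A high request count alone is not proof of a bot, since many people behind one office or mobile network can share an address, so treat the output as a list to review rather than a list to block.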

Knowing how to handle bot traffic is just as important as identifying it. Here are some strategies:

Use Google Analytics’ bot filtering, which excludes traffic from known bots and spiders (this happens automatically in Google Analytics 4), so your reports give a clearer picture of organic human traffic.

Add known bad bot user-agents to exclusion lists, and apply rate limiting so that clients sending requests faster than a person plausibly could are throttled early.
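These two measures can be combined in a few lines. The following is a minimal in-memory sketch, assuming a single server process: the keywords in `BLOCKED_AGENT_KEYWORDS` are placeholders rather than real bot names, and the limits are arbitrary. In practice this job is often handled by a web server, CDN, or firewall rather than by application code.

```python
import time
from collections import defaultdict, deque

# Placeholder keywords for illustration only; substitute your own list.
BLOCKED_AGENT_KEYWORDS = ("badbot", "evilscraper")


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client in any `window_seconds` span."""

    def __init__(self, max_requests=60, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # Injectable so tests can control time.
        self._hits = defaultdict(deque)

    def allow(self, client_id):
        now = self.clock()
        hits = self._hits[client_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


def should_serve(limiter, ip, user_agent):
    """Reject excluded user-agents first, then apply the rate limit."""
    ua = user_agent.lower()
    if any(keyword in ua for keyword in BLOCKED_AGENT_KEYWORDS):
        return False
    return limiter.allow(ip)
```

Checking the user-agent before the rate limit means excluded bots never consume a slot in the window. Keying the limiter on IP address is the simplest choice, though it will also throttle legitimate users who share an address.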

Some services use algorithms and machine learning models specifically designed to identify malicious activity; they could be worth looking into.

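Excessive or harmful bot traffic can hurt your standing with search engines like Google, which prioritize quality over quantity when it comes to web content. Spam bots that fill your pages with junk comments and links, for example, lower the quality of what crawlers see.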

Bots can inflate numbers artificially, making it difficult for you to gauge real user interaction accurately—which could lead you astray in decision-making processes.

Too many bots can overload servers, slowing down sites—a definite no-no since speed is a ranking factor!

Understanding and managing bot signals is essential for maintaining a healthy SEO profile. By recognizing the differences between beneficial and harmful bots—and implementing strategies accordingly—you can safeguard your website against potential threats while ensuring optimal performance.

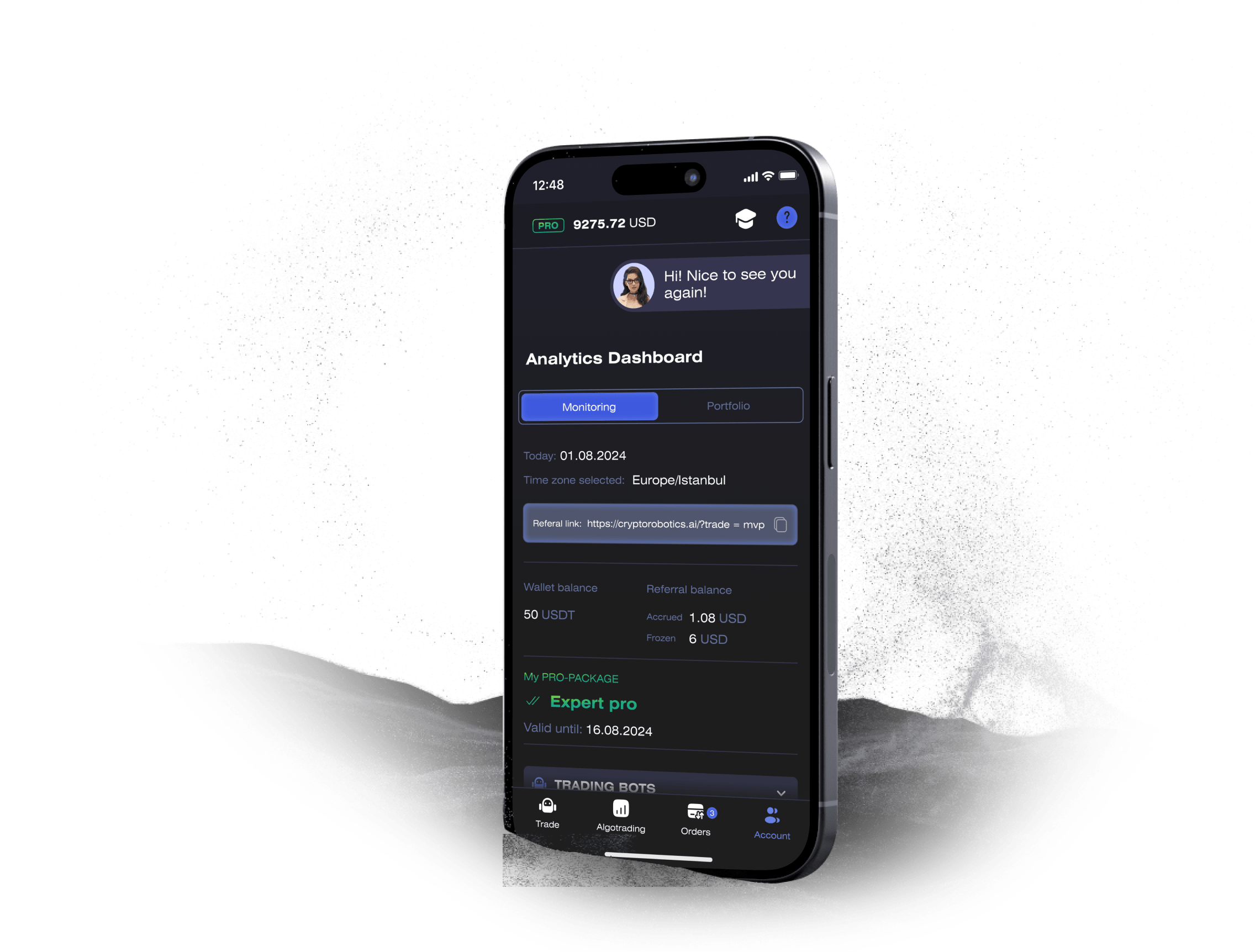

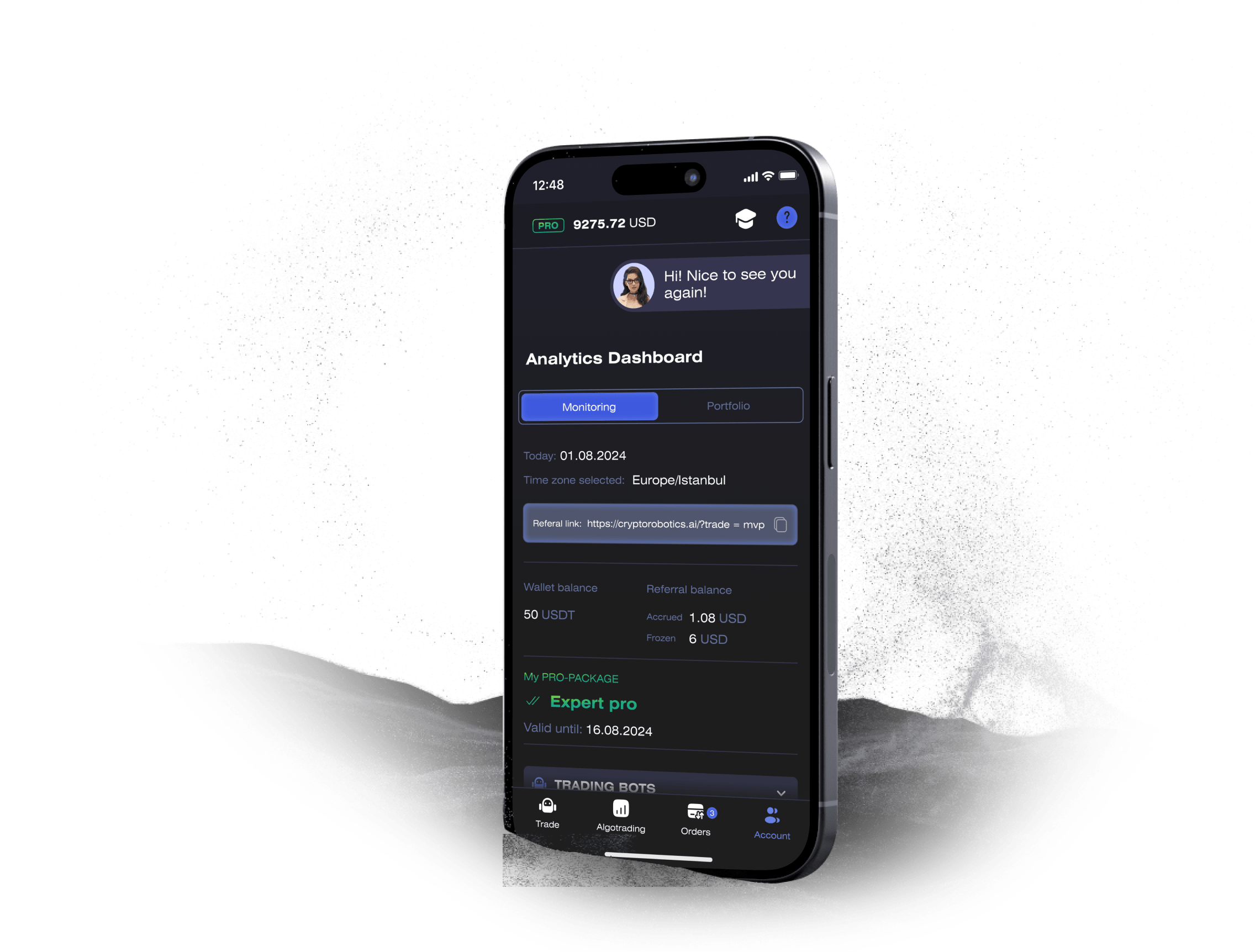

Access the full functionality of CryptoRobotics by downloading the trading app. This app allows you to manage and adjust your best directly from your smartphone or tablet.
